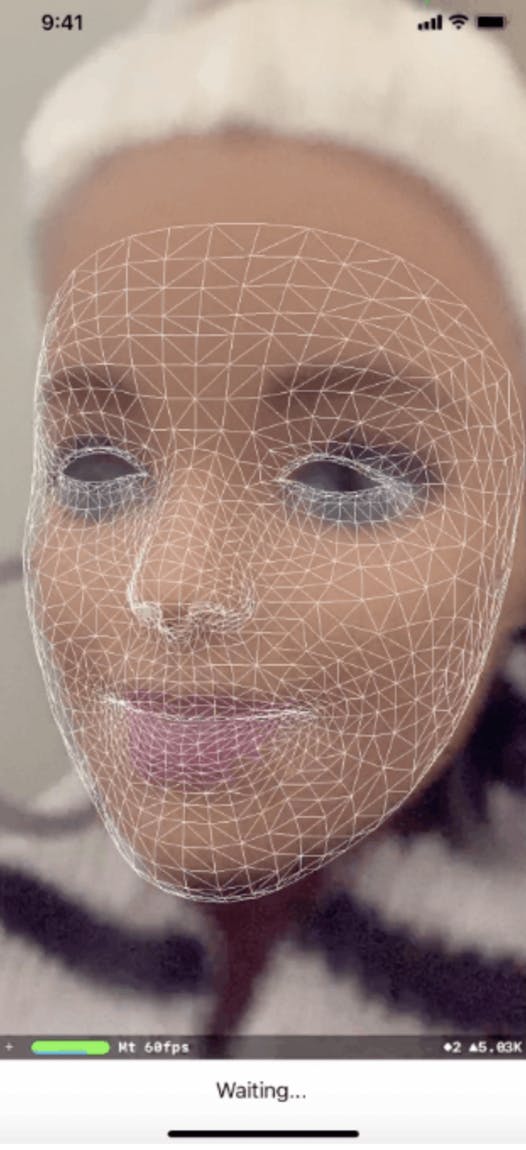

An AR app for iOS that recognizes faces

In this post, I show how to build a second AR app for iOS, aimed at readers who already know ‘UIKit’. The app uses face tracking to recognize a face, and it needs no additional media resources to set up the AR environment.

The app behaves as follows. Whenever it recognizes a face, it analyzes the facial expression and shows one of the following messages:

- ‘Waiting...’

- ‘You are smiling.’

- ‘Your cheeks are puffed.’

- ‘Don't stick your tongue out!’

- ‘Your mouth is weird!’

- ‘Are you flirting?’

Let’s get started.

The walkthrough consists of the three steps below.

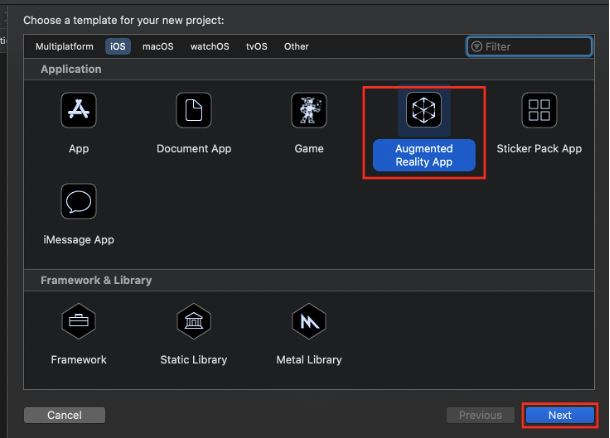

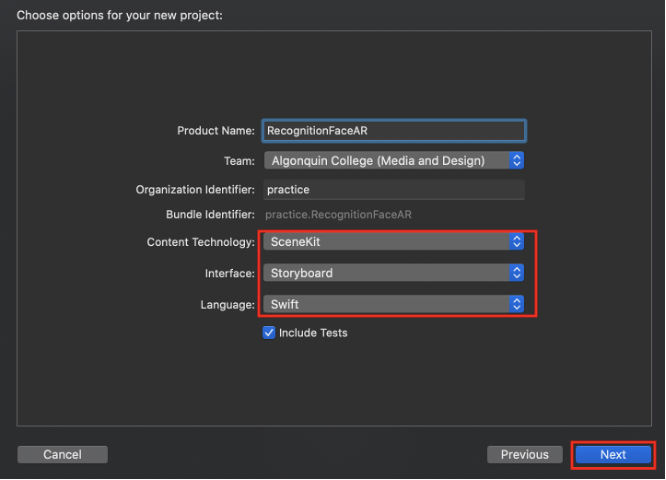

- Step 1: Create a new project with the Augmented Reality App template.

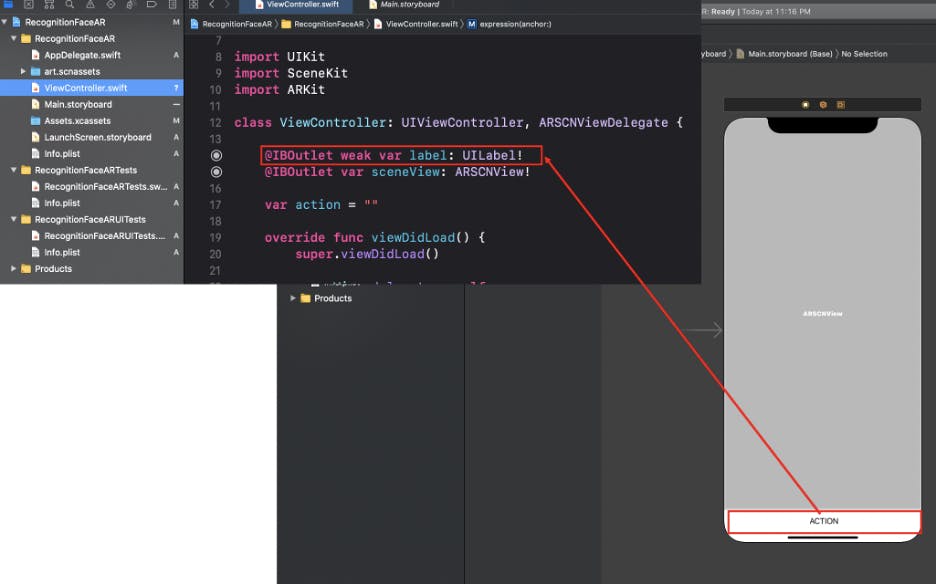

- Step 2: Add a label to the storyboard and connect it to ‘ViewController.swift’ as an outlet variable.

- Step 3: Implement the code that recognizes a face, analyzes its expression, and shows the result.

[Step 1: Create a new project with the Augmented Reality App template]

[Step 2: Add a label to the storyboard and connect it to ‘ViewController.swift’ as an outlet variable]

[Step 3: Implement the code that recognizes a face, analyzes its expression, and shows the result]

The code flows as follows.

(1) In the ‘viewWillAppear’ method, an ‘ARFaceTrackingConfiguration’ instance is created, and the session of ‘sceneView’ is run with that instance.

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)

        // Create a session configuration
        let configuration = ARFaceTrackingConfiguration()

        // Run the view's session
        sceneView.session.run(configuration)
    }

(2) In the ‘renderer(_:nodeFor:)’ method, ‘faceMesh’ is initialized and wrapped in a node that is drawn as a wireframe.

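    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        // Create the face mesh; return no node if the device or the geometry is unavailable
        guard let device = sceneView.device,
              let faceMesh = ARSCNFaceGeometry(device: device) else { return nil }

        let node = SCNNode(geometry: faceMesh)
        // Draw the mesh as lines (wireframe) instead of filled triangles
        node.geometry?.firstMaterial?.fillMode = .lines
        return node
    }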

(3) The ‘renderer(_:didUpdate:for:)’ method is called whenever the user's face changes. It updates ‘faceMesh’ from the new face geometry and calls the ‘expression(anchor:)’ method to determine the expression. It then shows the result in the storyboard label, on the main queue because UI updates must happen there.

    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        if let faceAnchor = anchor as? ARFaceAnchor, let faceGeometry = node.geometry as? ARSCNFaceGeometry {
            // Keep the mesh in sync with the tracked face
            faceGeometry.update(from: faceAnchor.geometry)
            // Choose the message for the current expression
            expression(anchor: faceAnchor)

            // Update the label on the main queue
            DispatchQueue.main.async {
                self.label.text = self.action
            }
        }
    }

(4) The ‘expression(anchor:)’ method analyzes the blend-shape coefficients of the updated face anchor and chooses one message describing the facial expression. It then stores the chosen message in the ‘action’ property.

    func expression(anchor: ARFaceAnchor) {
        // Each blend-shape coefficient is an optional NSNumber
        let mouthSmileLeft = anchor.blendShapes[.mouthSmileLeft]
        let mouthSmileRight = anchor.blendShapes[.mouthSmileRight]
        let cheekPuff = anchor.blendShapes[.cheekPuff]
        let tongueOut = anchor.blendShapes[.tongueOut]
        let jawLeft = anchor.blendShapes[.jawLeft]
        let eyeSquintLeft = anchor.blendShapes[.eyeSquintLeft]

        // Default message; a later matching check overrides an earlier one
        self.action = "Waiting..."

        if ((mouthSmileLeft?.decimalValue ?? 0.0) + (mouthSmileRight?.decimalValue ?? 0.0)) > 0.9 {
            self.action = "You are smiling."
        }

        if (cheekPuff?.decimalValue ?? 0.0) > 0.05 {
            self.action = "Your cheeks are puffed."
        }

        if (tongueOut?.decimalValue ?? 0.0) > 0.05 {
            self.action = "Don't stick your tongue out!"
        }

        if (jawLeft?.decimalValue ?? 0.0) > 0.05 {
            self.action = "Your mouth is weird!"
        }

        if (eyeSquintLeft?.decimalValue ?? 0.0) > 0.05 {
            self.action = "Are you flirting?"
        }
    }
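Because the checks in ‘expression(anchor:)’ run one after another, a later match overwrites an earlier one, so the eye-squint check effectively wins over all the others. The sketch below restates that decision logic as a pure function that can be tried without a device. It is only an illustration: the plain `[String: Double]` dictionary is an assumed stand-in for `anchor.blendShapes`, and the name `describeExpression` and the string keys are hypothetical, not part of ARKit or of the original project.

```swift
// Hypothetical stand-in for anchor.blendShapes: coefficient name -> value.
typealias Coefficients = [String: Double]

/// Returns the message for the given coefficients, applying the checks in the
/// same order as expression(anchor:), so a later match overrides an earlier one.
func describeExpression(_ coefficients: Coefficients) -> String {
    // A missing coefficient counts as 0.0, like the `?? 0.0` fallbacks.
    func value(_ key: String) -> Double {
        return coefficients[key] ?? 0.0
    }

    var message = "Waiting..."

    // The smile check sums both mouth corners; the others use one coefficient.
    if value("mouthSmileLeft") + value("mouthSmileRight") > 0.9 {
        message = "You are smiling."
    }
    if value("cheekPuff") > 0.05 {
        message = "Your cheeks are puffed."
    }
    if value("tongueOut") > 0.05 {
        message = "Don't stick your tongue out!"
    }
    if value("jawLeft") > 0.05 {
        message = "Your mouth is weird!"
    }
    if value("eyeSquintLeft") > 0.05 {
        message = "Are you flirting?"
    }
    return message
}

// A smile alone, then a smile with the tongue out (the later check wins).
print(describeExpression(["mouthSmileLeft": 0.5, "mouthSmileRight": 0.5]))
print(describeExpression(["mouthSmileLeft": 0.5, "mouthSmileRight": 0.5, "tongueOut": 0.3]))
```

The first call prints ‘You are smiling.’ and the second prints ‘Don't stick your tongue out!’. Keeping the rules in a function like this makes it easy to experiment with the thresholds or with the order of the checks before wiring them back into the renderer callback.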

The complete source code can be found below. Thanks to Professor Vladimir for sharing it. I hope it helps you enjoy AR programming on iOS.